Throughout this project, several classmates helped me greatly by testing it, spotting glitches and awkward hiccups in different parts of my operator setup, and suggesting ways to fix or improve certain details.

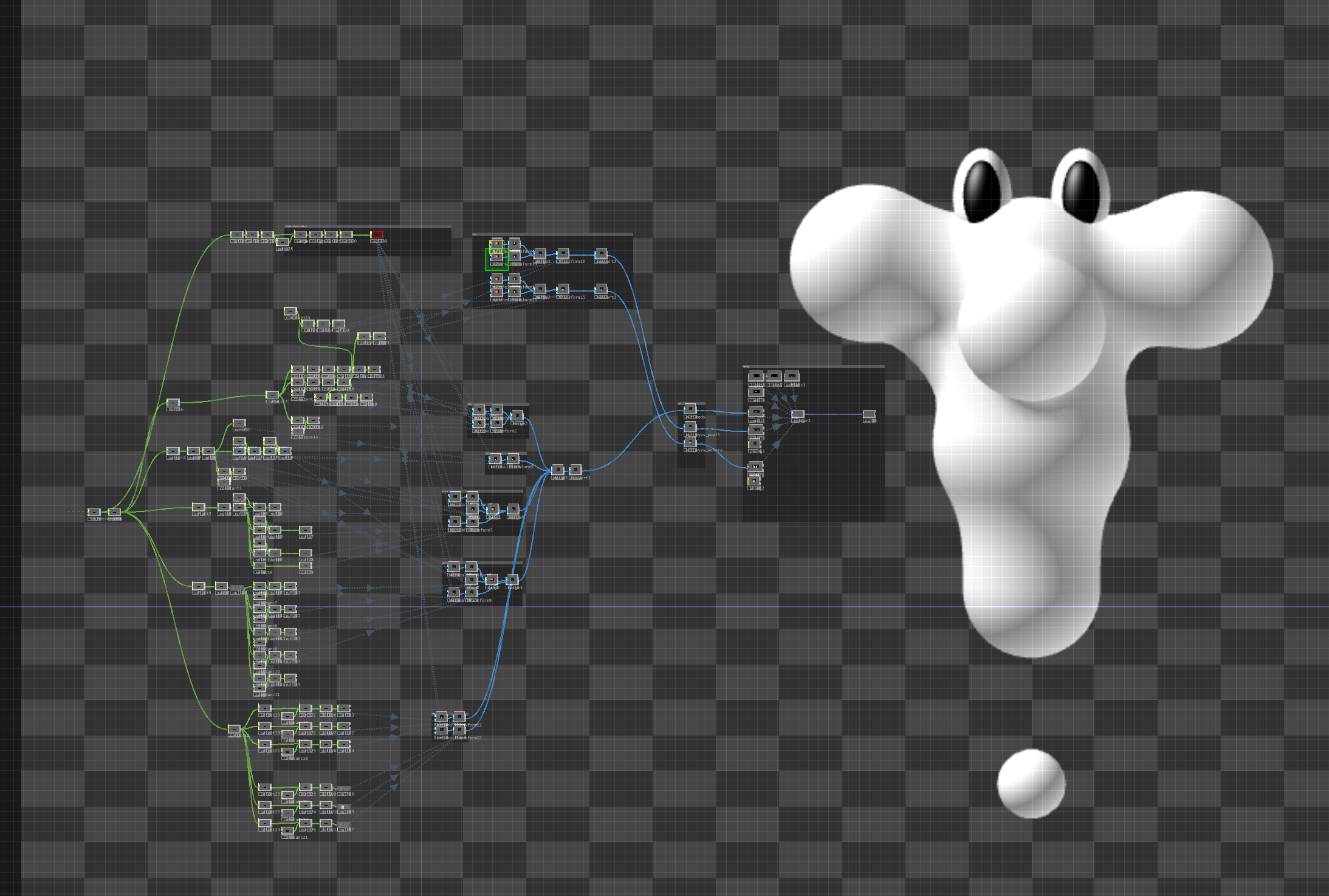

Nathan Kipka: suggested several technical workarounds and tricks for removing glitches from my operator setup

Casper Chappell: the main tester featured in the video; tested the project across several early passes

Japey Howarth: told me about using a "feedback" process in TouchDesigner, which I considered adopting; also helped test the project in the hallway